The Lab has recently released two new project viewers: the VLC Media Plugin viewer and the Visual Outfits Browser viewer.

As they are both project viewers, they are not in the viewer release channel, and must be manually downloaded and installed via the Lab’s Alternate Viewers wiki page. Also, as they are project viewers, they are subject to change (including change based on feedback), and may be buggy.

The following notes are intended to provide a brief overview of both. Should you decide to download and test either, please do file a JIRA against any reproducible issues / bugs, giving as much information as possible, including the info from Help > About Second Life and any log files which you feel may be relevant.

Visual Outfits Browser

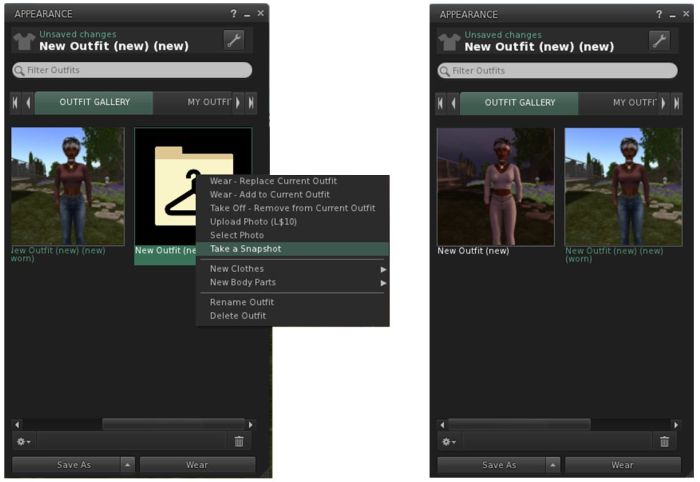

The Visual Outfits Browser (VOB) viewer, version 4.0.6.316123, appeared on Monday, June 6th. Simply put, it allows you to use the Appearance floater to capture / upload / select images of your outfits and save them against the outfits in a new Outfits Gallery tab within the floater.

The new Outfits Gallery tab (right-click your avatar > select My Appearance > Outfits Gallery) should display all of your created outfits as a series of folder icons, each one displaying the name of the outfit beneath it. You can replace these icons with an image of the outfit in one of three ways:

- You can wear the outfit, then right-click on its associated folder icon and select Take a Snapshot (shown above left). This will open the snapshot floater with save to inventory selected by default, allowing you to photograph yourself wearing the outfit and upload the image to SL, where it automatically replaces the folder icon for the outfit

- You can use Upload Photo to upload an image of the outfit you previously saved to your hard drive, and have it replace the folder icon

- You can use Select Photo to select an image previously saved to your inventory, and use that to replace the folder icon for the outfit.

When using the capability there are a number of points to keep in mind:

- Both the Take a Snapshot and the Upload Photo options will incur the L$10 upload fee, with the images themselves saved in your Textures folder

- In all three cases, links to the original images are placed in the outfit folder

- This approach only works for outfits you’ve created using the Appearance floater / the Outfits tab. It doesn’t work for any other folders where you might have outfits – such as the Clothing folder.

How useful people find this is open to debate; I actually don’t use the Outfits capability in the viewer as I find it clumsy and inefficient for my needs. However, pointing people towards the Appearance floater in order to preview outfits, when most of us tend to work from within our inventories, would seem to be somewhat counter-intuitive.

As such, it’s hard to fathom why the Lab didn’t elect to include something akin to Catznip’s texture preview capability within the VOB functionality. This allows a user to open their Inventory and simply hover their mouse over a texture / image to generate a preview of it (as seen on the right).

Offering a similar capability within the VOB viewer would, I’d suggest, offer a far more elegant and flexible means of using the new capability than is currently the case*. Users would have the choice of previewing outfits either via the Outfits Gallery tab in the Appearance floater or from within Inventory.

There are also a number of wardrobe systems available through the Marketplace. While these may require RLV functionality and come at a price, they may still be seen as offering a more flexible approach to managing and previewing outfits. As such, it will be interesting to see how the VOB capabilities are received by those with very large outfit wardrobes.

VLC Media Plugin Viewer

Apple recently announced they are no longer supporting QuickTime for Windows and will not be offering security updates for it going forward. As a result, the Lab is looking to remove all reliance on the QuickTime media plugin, which is used to play back media types like MP3, MPEG-4 and MOV, from its viewer, and replace it with LibVLC (https://wiki.videolan.org/LibVLC/).

This project viewer – version 4.0.6.316087 at the time of writing – replaces QuickTime with LibVLC support for the Windows version of the viewer only. The OS X viewer is currently unchanged, as Apple are continuing to support QuickTime on that OS. However, the Lab note that they will eventually also move the OS X version of the viewer to LibVLC as the 64-bit versions of the viewer start to appear, because the QuickTime APIs are Carbon-based and not available in 64-bit.

*I’ve been informed, and hadn’t appreciated, that this approach can be graphics memory intensive – see FIRE-933.