Update, September 8th: The unified snapshot floater is now a part of the de facto release viewer.

Update, August 26th: The unified snapshot floater is now available in a release candidate viewer, version 3.7.15.293376.

Niran V Dean is familiar to many as the creator of the Black Dragon viewer, and before that, Niran’s Viewer. Both viewers have been innovative in their approach to UI design and presentation, and both have been the subject of reviews in this blog over the years, with Black Dragon still reviewed as and when versions are released.

One of the UI updates Niran recently implemented in Black Dragon was a more unified approach to the various picture-taking floaters which are becoming increasingly available across many viewers. There’s the original snapshot floater, and there are the Twitter, Flickr and Facebook floaters offered through the Lab’s SL Share updates to the official viewer, which are now also available in a number of TPVs.

In Black Dragon, Niran redesigned the basic snapshot floater, offering a much improved preview screen and buttons which not only provide access to the familiar Save to Disk, Save to Inventory, etc., options, but which also provide access to the Flickr, Twitter, and Facebook panels as well.

He also submitted the code to Linden Lab, who have approved it. It is currently working its way through their QA and testing cycle and should be appearing in a flavour of the official viewer soon (see STORM-2040).

A test build of the viewer with the new, more unified approach is available, and I took it for a quick spin to try out the snapshot-related changes. Note that it is a work in progress, so some things may yet change between now and release.

First off, the snapshot floater is still accessed via the familiar Snapshot button, so there’s no looking for a new label or icon. The Twitter, Flickr and Facebook floaters and buttons are also still available (so if one or other of them is your preferred method of taking pictures, you can still open them without having to worry about going an extra step or two through the snapshot floater).

Opening the new snapshot floater immediately reveals the extent of Niran’s overhaul – and as with Black Dragon, I like it a lot.

The increased size of the preview panel is immediately apparent, and might at first seem very obtrusive. However, when not required, it can be nicely hidden away by clicking the << on the top left of the floater next to the Refresh button, allowing a more unobstructed in-world view when framing an image (you can also still minimise the floater if you prefer).

Beneath the Refresh button are the familiar snapshot floater options to include the interface and HUDs in a snapshot, the colour drop down, etc., and – importantly – the SL Share 2 filter drop down for post-processing images. The placing of the latter is important, as it is the first clue that filters can, with this update, be applied to snaps saved to inventory or disk or e-mailed or – as is liable to prove popular – uploaded to the profile feed.

Below these options are the familiar buttons allowing you to save a snapshot to disk, inventory, your feed or to e-mail it to someone. Clicking any of these opens its individual options, which replace the buttons themselves – to return to the buttons, simply click the Cancel button. Saving a snapshot will refresh the buttons automatically.

Among these buttons are those for uploading to Flickr, Twitter or Facebook. These work slightly differently, as clicking any one of them will close the snapshot floater and open the relevant upload floater.

While this may seem inconvenient compared with having everything in the one floater, it actually makes sense. For one thing, trying to re-code everything into an all-in-one floater would be a fairly non-trivial task, particularly as Twitter, Flickr and Facebook have their own individual authentication requirements and individual upload options (such as sending a text message with a picture uploaded to Twitter, and the ability to check your friends on Facebook). Also, as mentioned earlier, keeping the floaters for Flickr, Twitter and Facebook separate means they can continue to be accessed directly by people who use them in preference to the snapshot floater.

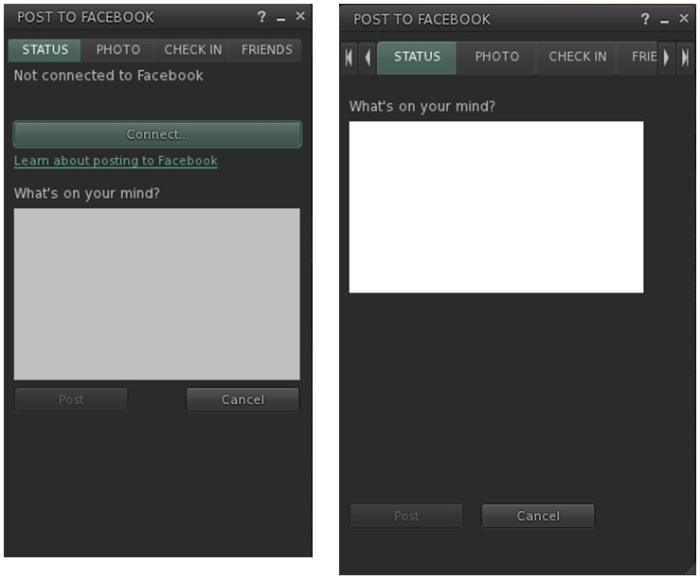

However, this latter point doesn’t mean they’ve been left untouched. Niran has cleaned-up much of their respective layouts and in doing so has reduced their screen footprints. The results are three floaters that are all rather more pleasing to the eye.

All told, these are a sweet set of updates which make a lot of sense. It may be a while longer before they surface in a viewer; I assume they’ll likely appear in a snowstorm update, rather than a dedicated viewer of their own, but that’s just my guess. Either way, they’re something to look forward to.

Kudos to Niran for the work in putting this together, and to Oz and the Lab for taking the code on and adding it to the viewer.