On Monday October 6th, Designing Worlds, hosted by Saffia Widdershins and Elrik Merlin, broadcast a special celebratory edition, marking the show’s 250th edition, both as Designing Worlds and its earlier incarnation, Meta Makeover. To mark the event, the show featured a very special guest: Linden Lab’s CEO, Ebbe Altberg.

The following is a transcript of the interview, produced for those who would prefer to read what was said, either independently of, or alongside, the video recording, which is embedded below. As with all such transcripts in this blog, when reading, please note that while every effort has been made to encompass the core discussion, to assist in readability and maintain the flow of conversation, not all asides, jokes, interruptions, etc., have been included in the text presented here. If there are any sizeable gaps in comments from a speaker which resulted from asides, repetition, or where a speaker started to make a comment and then re-phrased what they were saying, etc., these are indicated by the use of “…”.

The transcript picks up at the 02:25 minute mark, after the initial opening comments.

0:02:25 – Ebbe Altberg (EA): Hi, thank you. Thank you for having me on this very special occasion of yours, and ours. 250, that’s amazing! It’s incredible, incredible; I’m very honoured to be here.

Saffia Widdershins (SW): Well, we’re very honoured to have you here … Now, you’ve been in the job for around nine months now.

EA: Yes, since February, I think. Yeah.

0:02:59 SW: Is it what you were expecting, or how has it proved different?

EA: It’s fairly close to what I expected, because I’ve had a long history of knowing Second Life, even from the beginning. So Second Life, the product, was not a mystery to me. Obviously, as you dig in and look under the hood, you see some things that you wouldn’t have expected; and some of the other products in Linden Lab’s portfolio were maybe a little bit surprising to me, but we’re getting that cleaned-up. But with regards to Second Life, it is not too much of a mystery, as I’ve been following it so closely since way back in the beginning … So it felt very natural and quite easy for me to come on-board and figure out where to take things.

0:03:59 – Elrik Merlin (EM): And keeping this next question as general as possible: is there anything that’s been a pleasant or unpleasant surprise?

EA: Not that many unpleasant surprises; well, it was a little unpleasant how far we had managed to disconnect ourselves from the community and our customers and residents. So that was a bit shocking to me, because I had missed that part of the history. I remember the beginning of the history, where there was a very close, collaborative relationship between the Lindens and the residents. So that was a bit shocking to me, that … some effort had to be put in to try to restore some of those relations and some of the processes that had been introduced here that we had to reverse. You know, the fact that Lindens couldn’t be in-world [using their Linden account] and stuff like that. So that was a little strange to me and unfortunate.

Positives? There are many. There are so many talented people here, so that’s been a lot of fun to get to know people here. Some people have been here for a very long time; some absolutely incredible people have been here for over ten years working for Linden, so just getting to recognise what incredible talent we have here has been a positive … it was a little bit low-energy when I first came here, which was a little bit unfortunate, but I think we’ve come quite a bit further, and so the energy today in the office and amongst people working here has gone up quite a bit, so I’m very pleased with that.

0:06:03 SW: That’s brilliant. Have there been any stand-out “wow!” moments when you’ve come in-world and seen something and gone “wow!”?

EA: The “wows” for me may be less visual – I think we could do better with that in the future – but just the communities, and the types of creations and how people collaborate to make these things happen. The variety of subject matter and the variety of things that Second Life helps people to accomplish, whether it is games or education or art – it’s just incredible, the variety. And also the interactions with people are wild moments, where I can just drop-in somewhere and just start chatting with people, and that’s always a lot of fun and creates “wow!” experiences for me.

So the fact that this is all user-generated, in some ways that just wows me every day. It’s incredible that we can enable all these things to happen. But I’m certainly hoping we can get to a point where it’s more of a visual “wow!” in the future.

0:07:32 SW: I’ve been at the Home and Garden Expo this week … and there’s certainly some things there that are stunning examples of what creators are working on at the moment.

EA: Yeah. It’s taking everybody a while. A lot of new technologies have been introduced, and we’re still trying to make adjustments and fixes and improvements in some of those things. But as more and more creators figure out how to take advantage of these things, whether it’s mesh or experience keys and all kinds of stuff that’s just creating a new wave of different types of content and experiences, it’s fun to watch happen. It’s a lot of fun to be able to enable and empower people that way.

0:08:33 SW: We wanted to talk a little bit about the new user experience.

EM: Ah yes, and talking to different people working with new users, both English and Japanese speakers, interestingly enough, both have talked about problems with the new mesh avatars … One of the first things that people enjoy when they first come to Second Life is [to] customise their appearance, but the mesh avatars don’t really allow this, or they don’t allow it easily. Is there something that can be done about that?

EA: I don’t have a specific list of good things there; the team is working on making improvements to the avatars, from little things that we might see as bugs, and also trying to solve the “dead face”, get some eyes and mouths [to] start moving. But some of the clothing issues are probably also issues with the complexity of understanding what things can I shop for that are going to be compatible with what types of avatars and all that. Some of it is hard to tell with how much of it is complications with the transition… or the fact that you have two different ways of doing things happening simultaneously; we’re sort-of in this transitional period where you can obviously still go back to using any of the previous avatars, those are still all there. But we wanted to push ahead with what we figure is where the future is going to take us, and there’s probably some growing pains in doing that; but over time, this is where it is going to go.

So we just have to try to understand the bugs and the complexities and react to it as fast as we can. But I don’t, off the top of my head, have a list of known issues that we’re fixing with regards to the complexities around avatars, other than the stuff with getting the face to wake up. But I can look into that for a follow-up later on, but right now I don’t have anything right off the top of my head.

0:10:50 SW: As someone who directs dramas like The Blackened Mirror, we’ve long said that we would give anything for the ability to raise a single eyebrow …

EA: Yeah … ultimately over time, as [real world] cameras improve, if you’re willing to be in front of a camera, there are things you can obviously do to really transmit your real-world facial expressions onto your avatar, and we’re going to look at that further out. That’s not something we’re actively working on right now; but there’s certainly other companies, including HiFi that are looking at that, and we know companies that have already proprieted the technology behind it that we could license and do some of those things.

But there are very few of those types of camera around, so even if you would do that kind of functionality, very few people would be able to take advantage of it, so it’s a little bit early to jump on that. We need more 3D cameras in the world. Otherwise, there’s some other techniques – it wouldn’t necessarily be facial expression – but there’s a company working on technology to be able to have your mouth … make the right movements based on the audio. That’s an interesting technology, but they haven’t figured out how to make it real-time yet.

What they’ve found is that regardless of language, if you make a sound, your mouth makes a very specific movement and a very specific shape, and they’ve constructed all of the internals of the mouth and know exactly what your tongue and your cheek bones are doing in order to make that sound. Right now, not in real-time, but they’re working to get there. So then we could get the mouths to actually react to the sounds that you are making through the microphone.

So over time, more and more of this will come, but today it would be difficult to do something that would auto-magically make it work for everybody.

0:13:19 EM: The Asus Xtion … whatever it’s called … I must say I picked mine up on Amazon, so those things are out there. The only problem I can see with a 3D camera like that is that if you’re also plugged-in to an Oculus Rift … it’s not going to work. But the audio solution would be great.

EA: Yeah, we’re staying in touch with the company that’s working on that. They’re out of the UK, I think. So we’ll see when they get to real-time. For now, game studios use their tech to give game avatars a really realistic mouth movement based on what they’re saying, but that’s all “pre-done”; to do it in real-time, they have more work to do. We’ll see if that could become a solution. And ultimately, a lot of people will have 3D cameras, so that we can start to invest in 3D facial expression that way.

I’ve also heard the issue you’ve brought up with Oculus; they’re noodling with having a camera inside the Oculus, but it seems a little weird to have a camera facing your eyes to track eye movements …

I know they want to solve for that, because obviously if you’re looking at someone, or even knowing where a person is looking in the experience could have a lot of useful features associated with it. Even if you wanted to select something with your eyes, or go somewhere or just look at someone for that personal connection. I’m sure they’ll noodle on that, but how long it takes for them to figure that out and then for people to have Oculus in volume? That’s another thing we have to wait for. But [there are] lots of people plugging away at these problems that ultimately will help us as well.

0:15:08 SW: Would it be possible, when innovations are introduced for new users [to have] some of the new user groups test them and alert you if they see any problems in advance?

EA: We do release project viewers, just like we did with the Oculus release; that went out in a project viewer, I would say way before it was ready for mass consumption, so we do have a process for rolling-out project viewers where people can try things in advance of it being rolled-out grid-wide or to everybody.

SW: I was thinking less viewer and more say, if you set-up a new, new experience island for newcomers, perhaps get a couple of the new user groups in different languages to try it out, to say, “this is cool, but in Japanese it doesn’t work,” or whatever.

EA: Yeah. We’re obviously doing a handful of A/B tests on our welcome, with and without greeters, with and without audio, and seeing what works and what doesn’t work, and I think we’re getting close to having some metrics there, but there’s some surprising results, but nothing obvious that’s a winner yet.

And then we have also talked with some groups, and maybe Pete can help out and tell me exactly who it was again, but we’re working with some groups that are doing some user-generated welcoming experiences. And when those are up and running then we can send some traffic to those, and do an A/B test and see how well they do with conversion and retention compared to the experience we have, and if someone else can come up with a better one, then that’s good for all of us. So we’ve already committed to sending traffic to some of those, but I don’t know what the ETA on that is, but that’s something we’re working on.

Then for the future, we’re thinking really hard about how the on-boarding to the next generation platform will not necessarily be to have you go through this one place because we want to make it easy for the creators of experiences to bring-in an audience directly into their experience. Then you bypass the idea of everybody going through the same on-boarding experience, because now every experience is potentially the first experience for someone, and how do you make that work?

I think that is more interesting when it’s easier for creators to market and attract directly into their experience without the crooked path you have to take today to ultimately end-up in an experience. Then we have to think about some new user experience stuff that is like overlays or something we can share with experience creators so that we don’t have to force all their users through this one funnel to work, like we’re doing today.

SW: I think you’ll find plenty of volunteers who would develop stuff for you.

EA: Absolutely. And then as long as we can be helpful and provide the metrics of how is the on-boarding funnel working for you and your experience and for your users coming into your experience. And how does that compare to other experiences, and how can you go and look at what they are doing that you could learn from; and now everybody’s trying to on-board people in their experience, and we can then help people understand whether they’re performant or not.

0:19:04 SW: I’d love to see that come back into Second Life too. I think there would be people willing to do it for Second Life as well as the new platform.

EA: Yeah. So we mentioned that. I don’t know exactly when we’ll have time to give it sufficient energy to really get it off the ground. We’re working on a number of other initiatives right now that are ahead of it … and it’s one of those things that’s near the top of priorities for Second Life to bring back the idea of the community portals or something like that, where it’s easy for experience creators to attract users directly into their experience from the outside world.

Because ultimately, there are too many experiences in something like Second Life that we can’t mass market to all of these niche experiences that exist. We don’t even understand them all or know that they even exist. Whereas the creators of that experience have a very clear idea of who they’re trying to be useful to and attract an audience. And so we need to give people the tools so they can attract their own audiences into their experiences.

[Commercial Break]

0:22:05 EM: Ebbe, we were talking earlier about our location here on Matanzas, which is a very much-loved location that’s grown over the years. Have you found any special locations where you see that special commitment by residents?

EA: Several, like Berlin is amazing to me. It’s funny. My son actually did a little bit of contracting here, helping the product team with some in-world research. He spent some time in Berlin and interviewed Jo Yardley, and just listening to him talk to me when we were driving down to LA together the other day [about] how it’s grown over the years and the incredible community engagement around that experience and how they’re sticking to a very specific design and community philosophy, and it’s working and the residents in that community love being there, and there are many of those.

And that’s ultimately, I think, the combination of these creative capabilities we provide and then the community capabilities that we provide, and you can get these incredibly social experiences which just make it so powerful. It’s not just games; it’s places where people live and socialise and eat and drink and dance. So that’s something as we think about the next generation platform, we think really hard about how we get this combination of creativity, community, communication – how do you get the right combination of those things? And commerce, of course.

So I’ve gone to a lot of experiences where I’m absolutely blown away by not only the creativity of the experience, but the incredibly strong communities that are a part of those experiences.

0:24:17 SW: And it’s wonderful the way they can be international and draw people in from all over the world, and they’re sharing this space.

EA: Absolutely. So I think that’s the kernel. Enable people to create a space, and then invite people into that space and socialise within that space. That’s the core, and then as we add more capabilities and more interactive capabilities, you can make for more interesting shared experiences. But at the kernel, it is enabling the creation of spaces [and] the inviting of people to those spaces and then socialising within that.

0:25:00 SW: The flip side of that is that, I’m sure you’re aware, residents can be made very uneasy about changes, because they’ve got their communities together, and changes can worry them. Sometimes that’s because they’ve been very inventive in using the technology, so necessary changes can have an impact in unexpected areas.

EA: Yeah, of course. And for some people in Second Life this is not just a hobby or a toy, it’s their livelihood. So obviously, any changes we make can have a substantial impact on the users of the product. And I think that over the years, Linden Lab has done a pretty good job, you know, eleven years in, to spend as much energy as they have on making sure that things are backwards compatible most of the time; that we don’t constantly break stuff, and that things that have been created over the years continue to work. It takes a lot of effort.

And that’s one of the challenges we cut the cord on right away, pretty much, with the next generation platform. We said that we are not going to try to figure out how to be backward compatible with all of the content that’s been created in Second Life. That would put us in too difficult a spot right off the bat. But that doesn’t matter, because Second Life will be around for a long, long time for people to continue to enjoy what it is.

We don’t have any plans right now to do anything that would be destructive to what you can do in Second Life today. So it’s mostly just improvements we’re talking about, not extreme changes to anything that would jeopardise the content or the creations or people’s livelihood.

0:27:07 SW: Have you thought about – sometimes things can come out that have unexpected changes. I suppose you probably don’t know in advance where those problems are going to hit, and I was wondering if you’ve ever thought about having user groups to run things past, so you can avoid problems like, say the Terms of Service?

EA: I don’t know if you could have avoided the Terms of Service – and there was quite a bit of engagement, and a lot of voices heard. I wasn’t here, but I think the way it was rolled-out created more complications than what the change actually was. But obviously, being in touch with the community, understanding the needs of the community is critical, and that is something we cannot do just by watching. But sometimes for us just looking at the metrics might be more efficient for us to get to answers rather than talking to individuals.

That doesn’t mean we shouldn’t – and we do – obviously, we have a lot of ways for you all to provide feedback to us, and we’re trying constantly to make that easier to provide us [with] feedback. We’ll actually have something coming up – it’s a small thing – but to just make it even easier for people to just provide us [with] feedback.

And we opened-up JIRA again, so that people can log specific issues with us, and that is now monitored and checked in real-time, so there’s no backlog there; we’re constantly on top of that to make sure that we understand what’s going on out there. So any time you see issues and making us aware, whether it’s hitting me on Twitter – although that’s not the best way to talk about bugs!

But we listen to all of it, and as we roll out the next generation stuff, it’ll start early; we’ll only port some use cases, not all use cases that we all expect and are dependent on in Second Life; but it’s going to be extremely critical for us to be very close to the community in a feedback loop and hearing what people have to say.

We get a tremendous amount of feedback on Second Life in what people want and don’t want. Whether it’s us interviewing people or meeting in groups, having sit-downs like this. I try to connect with people either inside in some official capacity or just cruising around and talking to people. I think we have a pretty good idea of what’s going on, so I don’t think we can assume we’re just blindly doing crazy things … although it might seem that way at times, and that oftentimes has more to do with communications than what we’re actually trying to achieve.

And because there are so many different users with so many different use cases in mind, almost anything we do will be meaningless to some, and critical to some others. So other than just making it more performant, that’s something everybody can agree on, other than that, there’s probably nothing you can get everybody to agree on, because everybody has very different needs, depending on what they are trying to achieve.

[A note on the JIRA: Grumpity Linden recently confirmed that the majority of JIRA bug reports are triaged by the Lab within 24 hours of receipt, while a triage of new feature requests is performed every two weeks.]

0:30:50 SW: I think an almost universal one would be group chat, which I think has probably proved a tough one to crack.

EA: And that’s a performance issue, right? No, there are also features there … once you get into many groups and you’re trying to manage communities, there’s plenty of functionality we could add to make that easier.

But the performance issues of the lag in chat is something that a group of people here worked on for quite a while. It’s one of those Second Life old technology things where you just start pulling at a bit of string and it keeps going and going. So I don’t know when we can say definitively that we’ve solved it and you’re no longer going to see chat lag, but I already know we’re better today than we were a few months ago, but I’m not sure how close we are to being able to say, “it’s solved now, there are no more problems” … because we keep pulling that string, and we don’t know how much is left. It’s a little hard to predict when it’s going to be sorted, but we’re actively working on that one for sure.

[Those interested in the Lab’s work in trying to improve group chat can keep abreast of progress using the Group Chat tag in this blog.]

0:32:04 EA: And we have some other goodies coming. Performance-wise there are a couple of projects I have high hopes for. It’s very hard to predict what performance gains it will have, but we’re working to use a CDN [Content Delivery Network] for texture and such, and we’ve seen some really interesting performance improvements there, how quickly things load. And we also have a project [with] all of the HTTP pipelining stuff – so basically consider it the networking of how we communicate within the client and the server and how effective we are at getting the right chunks of data moved across the network, and we’re in a long project. I think we’re getting close, and both of these things should be, hopefully out within weeks, if not within a month. I just cannot say how much performance improvement, combined, it will provide, but I’m optimistic it will be noticeable.

0:33:15 SW: Well, this show won’t be going out for a couple of weeks, so it may be even in time for this show.

EA: Well, I’m not sure two weeks; we’ll probably have project viewers … some of these things, certainly the HTTP pipelining stuff has some fairly substantial changes in the code base and we have to be very careful and move that out across the grid very slowly. But hopefully within a month we’ll all notice that things are a little snappier.

[Again, for information on the status of the CDN project work, which initially encompasses texture and mesh asset data, please use the CDN tag in this blog. For information on the HTTP project work, including the HTTP pipelining, please refer to the HTTP Updates tag.]

0:33:51 SW: It’s really good to see new tools being introduced like the experience tools. It might be fun to cover that in a future Designing Worlds and take a trip to the Cornfield.

EA: That’s still in beta [Experience Keys / Tools] and we’ve expanded it to bring in more creators, which is positive, because it indicates it is working, and we keep listening to the feedback as these creators use these new tools, and I’m looking forward to seeing some of the creations coming out after that. Loki had a cool new experience that I haven’t gone back to since they said they made it work right. And Madpea’s working on something – there are lots of good groups working on leveraging the Experience Keys, which will make for more interesting experiences.

0:34:55 SW: Something that people have talked to me about again and again, is there any way people could have second names in Second Life again?

EA: It’s on the list of Second Life things that I know both Oz and Danger would like to tackle; I don’t know what exactly or when exactly. I just know it’s high up on the list. So it’s not something we’ve said “no” to, I just don’t know when we can say, a) yes, it’s now an active project, and b) when it would come up, and c) exactly what it would be.

But it’s clearly something that the team would like to solve. I just don’t have any more information than that right now, because everything that is below the thing that we’re actively working on right now, gets a little fuzzy until it actually becomes an active project where people are actually working on the designs and the specifications and the code; then you start to have some pretty good predictability. So it’s sort-of in that next set of things that we would like to tackle, I just don’t know how many other things would get in the way.

0:36:15 SW: I have to say that just to hear you say it’s on the list will make a lot of people very happy.

EA: Yeah, and I thought we made the list somewhat transparent, so I’ll have to check and see. Maybe we didn’t share that list completely, but I’ll see where we’re at on that to make you aware of what we’re at least considering.

[Programme Break]

0:40:40 EM: I’m hoping we can talk a little more about the plans for the new platform. As we understand it, you’re planning on it being massively multi-user. Is it possible to share any thoughts on how that can be achieved and then maintained?

EA: Well, achieved and maintained. So, we’re quite early here. Building something like this obviously takes quite a while. I mean, just look at the amount of effort that has gone into building Second Life as we know it. And so far, progress is good, but it’s far from a world yet. It’s technologies starting to come together.

And we’re working in a different way to make sure that we’re fast and agile, and so we’re learning a new way of working together. We’re about four months in, although some of the technology had been worked on a bit before then, but in earnest we really started to devote and put significant resources on it and really go after it about four months ago.

We’re probably three or four weeks away from having our first internal milestone, which we had hoped to achieve sooner, but it’s really hard to predict so early in these big projects. And the first big milestone for us will just be the ability to import content, script some stuff up in that content and then cruise around as an avatar in that world. We’re getting close. There’s a tremendous amount of technology that needs to go into just doing that; just the networking, the physics, the lighting, the import pipeline, the server technology, client technology, cloud technology – so many things that we’re doing from the ground up.

We have a pretty strong idea of what we want it to be. Like I said, it’s going to be in the spirit of Second Life, in that idea of the user-generated content, creating spaces, places, and things within it. And then making it easy to invite people into those spaces and communicate and socialise within those spaces. That’s the core of what we want to solve for, but to do it in a way that the visual fidelity of the content, the performance, the scalability and those things just go to a place where it would be too hard to get Second Life to achieve those things retroactively.

So we’re now working hard to, now we’ve defined that first milestone and we’re close to getting closure on that first milestone, the next milestone will be at the end of the year, and we’re still figuring out what things, exactly, we want to achieve in that time; that’s coming up here over the next couple of weeks.

But we’re also looking out to what will the first, at least a little bit public, release, an alpha release which might be invite-only for certain use cases, when can that take place. And we’re trying to aim for somewhere middle of next year, where for some use cases it would be useful to some users who would then like to engage with us and start testing that. And the types of users that would be useful depends a little bit on what types of experiences we can get ready in that time.

For example, if we can’t get avatar customisation really nailed-down by then, the entire fashion industry and the strong avatar identity use cases maybe take a back seat for that release, and it could be other use cases that make it easy, let’s say, for an architect to bring in content and visualise their experience and then invite people into that experience, where maybe the way your avatar looks is not super-critical.

But it’s still so early; we’re still negotiating what things we could get done in that time frame; but we’re trying to define and focus our energy not on what the end is going to be, but what is the beginning, and how do we make the beginning interesting for users. First we have to paint the picture of what the end would be, now we’re backing up and saying, “OK, what does the beginning of getting there look like as far as getting real users trying to interact with this thing?”

We obviously still have the goals in mind for making it cross-platform so that it’s useful on mobile as well as PCs as well as HMDs like the Oculus. It’s a very big project; but I’ve already seen this little test world that we have, and I look at that little test world, and I go, “You know what? I haven’t seen something in Second Life that looks that nice.” So even though it’s this early, you can already start to see that we have some advantages already this early on. But it is very early.

We want to get out as soon as we can for some useful cases so that we can start a more interesting dialogue with users about what it is and what it’ll be and how we can go down the path together to make it become what we want it to become.

0:46:48 SW: It must be very exciting for you to be looking at it and thinking, “this is mine! This is my new world!”

EA: Yeah, but at the same time we have the luxury of a lot of prior experience and a lot of prior art. So in some ways it’s not like we’re inventing something that has never been done before; we’re trying to create something that is a very strong evolutionary step forward over a lot of things that have been done before, but try to figure out how to do it in a way that could be much more broadly appealing and much more performant, and ultimately, enable even more incredible creativity and engaging even larger and more creative communities. It’s going to be a fun ride, that’s for sure!

0:47:48 SW: I was wondering if you had any thoughts about how you’re going to be marketing this? I’m not really thinking ads here, but I’m thinking more demographics; the kind of demographics you’d appeal to, because most new games are targeted at a younger demographic, and mainly at a youngish male demographic, which certainly is not true of Second Life. Second Life clearly appeals to an older group, and a larger proportion of females than some games. So is this going to be a target demographic for the new platform?

EA: Well, I think that because the intent is similar, I think we can do something that will make it easier for more use cases to be solved. If you think about it, today it’s quite hard to say, “I want to create an experience for me and my team” – whatever the definition of your team is, whether it’s your community, your company; whatever team it is – “I want to create that experience and invite those people into that experience and then socialise with them or do things with them in that experience.”

It’s non-trivial to just make that happen in Second Life today. I mean, even just to configure your land to be accessible by only these people … it’s a bit of rocket science to just do that … And the path to get someone from outside this world into that experience is kind of a crooked path. I wouldn’t say it’s the easiest way to say, “Hey, you there, friend of mine, or customer or potential resident, come into this place.” It’s not an easy thing today, so I think there’s a lot of ease-of-use we can do better …

But your question of who we will market to? We have to think about whether we want to do it very differently for different types of verticals, because I can think of lots of different use cases. I mean you can think of lots of incredible use cases for just architects alone. To be able to just import your model for what this new hotel, house, whatever, is going to be; landscape it, script it up, and then be able to invite potential clients, potential partners, to come and experience this space together and socialise within that space around that thing.

That, to me, seems like a fairly basic use case, but Second Life is not really used like that today, because that is a little too difficult for them to do. So then you could really start to target them; and you start to think, “OK, what about education? What can we do to really blow-out the educational market? What can we do to blow-out entertainment?” You can slice those up in lots of different ways as well. And it’s a part of the challenge; it could almost be anything for anyone. How do you market something that could be anything as opposed to something very specific?

But we have a long way to go to even think about naming, branding, specific target audiences over time; which target audiences as the platform matures. And [for] some things, you’ll be better-off in Second Life for some period to come, even after this thing starts to come out, for some use cases.

So we don’t need to compete with Second Life early on. Things that work great in Second Life – keep doing that there, and we’ll grow into more and more use cases where people in Second Life can more obviously start to make a decision on which side they want to play on. But I think it’s still so far out, that it’s hard to answer that in a precise way.

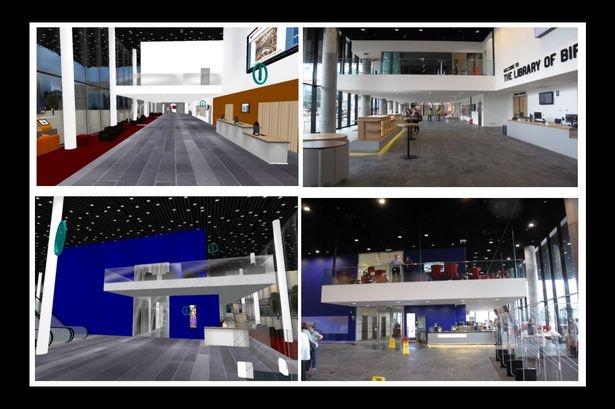

0:52:10 SW: We visited the City of Birmingham library a few seasons ago, and they actually did what you’ve been talking about. They set-up a virtual world City of Birmingham library as they were building it. The architect set it up, and the librarians came in and walked around the model and were able to say, “well, I think there should be more comfortable reading chairs here,” and, “we need desks and tables here,” and they helped the library evolve and the uses of the library evolve, by exploring in Second Life.

And when they got into the real library, they hadn’t built it all, they’d built a lot of the public areas, and they said that the librarians just moved with complete confidence around the public areas that they’d been walking through in Second Life, because they knew where to go, they knew what it all looked like. But everyone got lost in the office section, because the office section hadn’t been modelled in Second Life, and everyone got lost there! But the rest of the Library that had been modelled, they just walked around with complete confidence.

EA: That’s brilliant! We should just package-up that learning experience and make everybody aware, you know, “Don’t design anything until you’ve tried it out in Second Life!”

0:53:39 EM: Now Ebbe, I wonder if we can talk about the decision to go closed-source for “2.0”. What’s the reasoning behind that, and do you think going with open-source for SL was a bad move? I’m wondering what the motivation is, whether it’s to do with something like protecting IP rights, and stuff like that? Sorry, that’s three questions in one, isn’t it?!

EA: I think it probably all relates. I think for starters, it’s simplicity. We want to do a lot of iterative, fast work. And you want to have some level of stability and process in order to go open-source, or you’re just going to be jerking people around. And we also believe that we want to make sure that we try to approach our viewer in a more open way, in that we want to make it easy to extend.

A lot of the innovation that third-party viewers have done, I think could have been done on top of our viewer if the viewer was just extensible; call it with plug-ins or what have you. So there’s a lot of applications with models like that, which allow for a lot of customisation and extensibility without being open-source.

So it wasn’t that we didn’t want to go open-source, it was a) that we wanted to not add extra labour on ourselves in the beginning, until things start to take shape, and we have a solid foundation; and b) we want to focus on extensibility and see how far that can take us, and how many of the [options] that open-source viewers have been able to provide to the community could be provided that way. And it might be less cumbersome and provide just as much value to tackle it in that sense.

So that’s kind-of why we started this way. And if we find over time that we would get more mileage out of open-source, then that’s an opportunity that we have to consider in the future. But for now, we didn’t want to add that extra complexity.

0:56:02 EM: That leads-on to another question, which is indeed what the role of the people who today do the third-party viewers will be in the “2.0” version? Is there anything you can tell us about that? Presumably, it’s building on that extensibility.

EA: Yeah. I mean most of what they’re doing is creating new user interfaces and new features that actually work against the back-end we have, and it’s just features stuff we decided we didn’t want to do for whatever reason, because maybe it was more vertically specific to a smaller audience – which doesn’t mean it’s not relevant, it’s just something not everybody would necessarily use. Sometimes if you try to put all the features in one product, it just becomes too much.

So I don’t think the viewers necessarily did a lot of innovation in the back-end of things; it’s more of the front end. And if we can enable them to provide those solutions to customers and for specific use cases that we don’t necessarily focus on, without having to do all the work of an open-source viewer, it might actually be more efficient for them to provide that type of value as well.

0:57:16: Are there any more thoughts coming out on the ways to transition users and creators? There was a big explosive issue when news of the new platform was announced; people are very concerned about their inventory that they’ve invested in, and how much they’ll be able to take.

EA: So what we said was, don’t bank on full backwards compatibility for all content, because like I said earlier, it would potentially put an extreme burden on this new product and we would just spend more time just getting back to where we are versus being able to focus more energy on moving into the future.

And we’ve said that mesh is something that is very likely to be importable, we’re working on that already. So people working in mesh should be able to leverage their assets to a large degree in the future.

Scripts – we’ve said it’s going to be a completely different scripting language. I’m sure there are people out there who will think of ways of creating converters for various things to bring more things over more easily. But we’re trying, in some ways, to simplify things for ourselves as well as not constraining ourselves by just thinking about what would the future be if we didn’t have to think about the past when it comes to content and content creation. And then over time, we’ll be able to provide more information on how existing work can be brought into that place.

But it’s also going to take a tremendously long time for the new place to be able to offer the capabilities that this place offers. And so for a long time, for a lot of creators, this will be a more interesting and a better place to be. For some use cases and for some users, they might find they can spend their energy in the new world versus this world sooner, but it will definitely be more narrow use cases in the beginning, and over time they will broaden and broaden, but I’m not trying to pressure anyone to pack-up and leave this place.

This place is great, stay here for a long, long time. We don’t even think about how to transition people in some specific time frame. If three years from now, this is still a better place for people than the new place, then so be it.

We have said that we would like to make it [so] it’s easy for you to move back and forth using your identity and your Lindens, so that you can hop back and forth, and do what you do here, the place you enjoy and love, and then go over and experiment and play over there. And maybe you’ll say, “Well, it’s not ready for me yet”, you’ll come back here; or you’ll say, “Hey, that’s interesting. I’m going to spend more time over there than here.” It’s up to each and every one. So that’s kind-of how I see it.

1:00:42 SW: I have to say, I doubt whether I’ll be using an awful lot of my current inventory in two years time. But you never know; there’s always going to be bits that are necessary; old issues of the magazine, for example.

EA: I’m sure there’s a lot of interesting, valuable assets in this world that would be possible to leverage in the new world, but I just don’t want to set any expectations, because we’re not thinking how to solve something like you can just pack-up your entire region, and, boom! You’re in the other place twelve minutes later, and everything looks the same. No, that’s not really the intent.

We’re going to continue to make Second Life better and faster and more performant in addition to the stuff that we’re doing with the next generation stuff, so things will improve here, and I think we’ll all learn and discover over time what thing works the best for what use case.

And if the new thing ends-up being a superset of everything we’re able to do here, and people actually rather quickly move over to take advantage of some new things, then that’s one outcome. Another outcome could be that the new thing doesn’t solve some things that are critical for people who are in this thing for a very long time for various reasons, whether it’s hard to do or it not being in our interest to solve those particular use cases in the new world; because we don’t want to assume it’s going to be just a pure superset of everything people appreciate in this world, but it could be that that’s the case.

We’re obviously looking at all the things one can do in this world and trying to understand if and when someone could do that [in the new platform]. But like I said, it’s a long project, and I think it’s easier to see it and take it as it comes and not have to stress about end date here, or anything like that.

1:02:59 SW: Like we can make TV programmes here, and obviously we’ll want to make TV programmes in the new world, too.

EA: Yeah, of course. But when we would make that work versus something else, I can’t say. Maybe on that one I could say, but not off the top of my head! Someone here might know, “Oh you can do that!”, you know …

1:03:25 SW: If we can use FRAPS, we can probably do it! … I was going to ask have you given much thought as to how you can establish community on the new platform? I know that you’ve taken on a new tranche of software engineers to build the platform; do you have any community outreach people working with them and looking at issues of building community at this stage?

EA: Not actively with regards to that project, but we watch it and see it and participate in it in Second Life all the time; including something like this. So it’s something we’re obviously quite aware is a critical component, like I was talking about earlier, it’s making it easy to create a space, an interactive, interesting space; easily bring people into that space, which is not true in Second Life today, and then easily communicate amongst each other and socialise. Which actually is quite easy in Second Life today.

So that’s a core that has to be true in the next generation product as well. And if people can communicate and collaborate, and if we provide the tools to make that collaboration and communication effective and easy, then communities will form, whether you want to or not (chuckles). And I’m not saying it’s just that simple, “build a tool and they will come”, there are also things we can do. But ultimately, most of the communities in Second Life are successes of the communities creating the communities. It wasn’t we [the Lab] who created the communities; we provided the tools and capabilities that enabled communities to form. So I would give more credit to the creators of communities rather than us when it comes to all of these successful communities we have today.

1:05:39 SW: So you’ll be talking to leading community people about ways in which stuff that they’re really going to need to build communities, what would be really helpful for them to build?

EA: Yeah, yeah. Of course. So builders are one kind of user, consumers are one kind of user, entrepreneurs are another type of user, and community builders are sometimes another kind of user. Sometimes you have one user being all of those; sometimes people are very dedicated to one or the other. They don’t build or they don’t do entrepreneurial things, but they are community leaders and they create communities.

So we have to understand each of those types of users, and what we can do to empower them and enable them to be successful. So obviously, being in touch with strong community leaders and what they’re trying to do to create successful communities and listen to them and understand what it is that we’re doing that’s enabling or preventing them from being successful in their endeavour of creating strong communities, is something we have to be dialled-in to.

We have the luxury of having a lot of successful community leaders in Second Life, small and large and all around various activities. I mean, there’s so many of them in the education space, in the non-profit space, in the role-playing spaces. So we have great luxury with having within reach, inside Second Life, a tremendous breadth of experienced people we can work with to make sure that the next product empowers them in ways that they can be successful.

… And this is what’s so fascinating: there’s so many different types of users and customers and use cases that we enable. Things these days – the applications – tend to be more narrow and very specific. And it’s a strength we have, but it’s also a certain amount of complexity we have. It feels sometimes we have to re-implement all the things we take for granted in the Internet at large inside this one thing. Building communication tools and social networking tools and creation tools and monetization tools. Sometimes we’re, like, oh, we’re eBay, or we’re PayPal, and Maya and Skype and whatever, with a team that’s a fraction of the size of each one of those companies that focus on that one thing. And we have to figure out how to package just enough of each of those components to get sort-of a functional space and place and community and society like Second Life, and it’s quite something.

1:08:51 SW: Have you ever seen … Loki Eliot’s … its video … about the little robots at Linden Lab and all the hard work they have to do?

EA: Send me a link to that, I don’t think I’ve seen it! Obviously, I appreciate what he’s able to do. He’s obviously a very powerful creator. I was just a few weeks [ago] over in his place where he’s working with Experience Keys to create a little bit of a game experience where you walk around and collect things. When I was there, they were just hours away from fixing some bug, so I was supposed to come back, and I haven’t been back yet; but I think it’s been up and running now for a few weeks, so I’ll have to go back and check that out.

1:09:56 EM: By the way, is there a term we should be using to refer to the new product? Is there an official name? We never know what to call it!

EA: We have a code name internally, but we don’t necessarily want that to leak out, because a) we’re not sure that it’s a term that’s appropriate. I mean, you have to do some work to make sure that – if we picked a code name like “Tahoe” or something, you do a common place or something like that, then you can get away with it. The code name we’re using isn’t like that … we haven’t fully checked to see if it’s a legal, valid code name to be using in a product sense.

And also, it can start to create a brand ahead of itself, before it’s been thought through. And so now you have to figure out how to unwind that name. So that’s why we’ve refrained from starting to call it something … either we create a code name that’s clearly a code name and will not take off, or if it’s going to be an interesting code name, it’s probably better if it’s the actual name. And how long it’ll take to come up with that, we don’t know. For now, we just refer to it as the next generation platform … that’s where we are.

Pete and I, we’re talking to naming and branding firms and stuff like that to figure out the process of how do we come up with a name and a brand and an execution that all makes sense. But it’ll take some time; and if we have to come up with a code name in the intermediate, it’ll be something [like] we’re calling it internally.

1:12:10 EM: And it kind-of leads on to something else I’d like to ask you, if I may. This concerns not only the next generation platform, but also the one we’re in now, Second Life. Do you have any plans or thoughts on improving the intellectual property protection situation in virtual environments you’re involved with?

EA: Well, first of all, I think that we’ve done a lot! We’ve done more than most, if not more than everybody to try to make sure, to protect people’s IP. And it’s a really difficult battle; there is no copy protection schema or IP protection schema on the planet that actually really works, completely air-tight.

And as we know, people have worked out how to rip people off in almost everything that there is; you can look at the music industry trying to fight this battle, or anything, or the software industry. Everything gets copied and cloned, and then you look at all of the effort that these companies put into trying to prevent this from happening, which gets to a point where it drives users crazy; you have to type-in 47 digits to unlock my thing, and license keys – I mean, it can get really complicated and actually get in the way of things.

So it’s a delicate balance to try to make the things simple and easy and still give people sufficient protection that they feel it’s worthwhile to spend energy to create and develop, and to sell and distribute and not have all their work instantly being ripped-off. But no matter what we do, someone’s going to figure out how to rip it off, and it just happens. If you want to just read bits and bytes off the graphics card, at some point it’s almost impossible to stop someone who really wants to steal.

And as far as language? Do we really want to talk about the whole ToS again?

EM: No, no, no.

EA: OK, but we’re trying to make it clear to people that the content is yours, and we just need to have sufficient protections to protect ourselves. But again, it’s obviously not in our interest to make a mess for content creators by ourselves stepping in and starting to be part of the problem, rather than the solution with regards to IP protection.

So as we think about the future, these notions of No Copy, No Transfer, and things you can set on things and managing that. We’re thinking about those kinds of problems for the next generation platform, and do we need some of those things from the beginning? Can we introduce some of those things later on? Should they be done in a similar way, in a different way? We’re still having conversations about those sorts of things.

But ultimately, it’s in our interest to have creators able to be incredibly successful … Everything we do is dependent on creators being successful. And “creators” is in a broad sense, whether you’re an entrepreneur or an actual creator of content or a community manager. We want the users to be successful on our platform. And if they don’t feel it’s worth their time spending energy creating experiences in here and running communities because it can get destroyed or stolen or whatever, then it makes it impossible to be successful, then we’re doing something wrong. That’s the core.

1:16:15 SW: You want all boats to float.

1:16:17 EM: As we gently draw towards the end of this discussion, and it’s very, very kind of you to spend so much time with us, I was wondering if we might strike a more personal note for a moment. I think one of the things that has struck and delighted residents, is your clear excitement about Second Life and all its possibilities. What is it that drew you to this role, and what particular things do you think you bring to it, if I may ask?

EA: So I think a lot of it has to do with my upbringing and the types of things that have interested me over time. I very much have, call it a right brain / left brain thing – although I think that’s been dispelled as a myth, but anyway, the analogy still works! – where both very technical and logical thinking is something I enjoy a lot, but then also creativity and art is something I enjoy a lot … and this goes all the way back to my parents and the things they did, and we did together.

But what I ended up doing in college ended-up being natural for me; I studied computer science and fine arts. So I would go from the computer lab, sitting all night writing code … to the art studio, where I would paint and draw and sculpt and architect, and photography, and then back to the computer lab, writing code, and I would be equally happy in both places.

So something like Second Life, which is the breadth and depth of the use cases and the types of users and what they’re able to do, and to enable that, and then seeing this intersection of technology and art is just to me … I couldn’t think of a more interesting place to be, to just constantly stimulate my brain in thinking about all the possibilities and all the things that it enables and empowers.

I’ve always also sort-of enjoyed tools or platforms that empower others to do things. Whether that’s to sell what they know or to write or create; the creative toolset has always been interesting to me. So I think it’s all the ingredients that you want to have to be excited and have fun and [to be] challenged and stimulated are all there for me. This is like the perfect smörgåsbord of possibilities and capabilities; it’s the most fun I can imagine having.

1:19:30 SW: Fantastic! One of the things I always find fascinating is, we’re doing this interview and the most natural thing to do with this interview was to find seats and sit down to do it. I mean, there’s no reason at all why our avatars have to be seated. Yet somehow you feel you need to sit down to have a chat like this.

EA: Yep … I feel that I’m having a better conversation with the two of you, sitting with you here than if we were just doing this over Skype right now. It wouldn’t work as well for me. And I think that’s true for most people; we just haven’t made it easy enough for people to experience something like this. And this here, what did it take for me?

Well, someone had to create this beautiful space, and most people will not create spaces like this; they will take advantage of creators who will create these types of spaces. So all I had to do was come here, figure out how to navigate a little bit and sit down, and then start talking. And it’s just not that simplistic just now. If I were to send a meeting request to fourteen people who had never used Second Life, and say, “Come on in, let’s have a chat!” An hour later, we would still be trying to figure out, “Where did those four people go?” So there’s a lot we need to do to just make that just a natural, simplistic thing to do.

1:21:04 EM: Although there are actually people who claim to be able to get people prepared from a standing start in half-an-hour or less for attending an in-world conference, for example.

SW: Imperial College London, where they’re training nurses to come in and do stuff.

EA: But again, you have to have someone really motivated. And you said 30 minutes; if it takes me 30 minutes to get a group of people together in Webex or something like that – and usually it takes us 5 or 10 minutes in front of any meeting we do that’s not in Second Life; even sometimes inside Second Life when people are experienced with Second Life – but whether it’s Webex or Skype or Google Hangouts, there’s always something that went wrong. Microphones don’t work, the camera doesn’t work, this thing, that thing.

And if it takes five minutes at the start of a meeting just to get that stupid Webex thing working again, people just go insane. So if it takes 30 minutes? People have already given up a long time ago. But normal people? You might get a minute, then they’re done with you. Especially if you have to download something, etc.

And then when you log-in: now where am I? How do I get there? Yesterday, when I wanted to get into this thing, Pete had put the SLurl for this place in the calendar, so I copied that into my browser and hit Enter, and I go to the page that says, “Go visit this place”, and I click the button – and it’s not working. What happened? So now I know I have to copy the end of the URL and go into the viewer and paste it in the box … that would have been a complete show-stopper for another user.

So there’s just little things along the path, and there’s several of them, that we have to iron-out to make it very easy for people to come in and socialise like this.

1:23:14 EM: But once you get there, look at the benefits, and I would say being able to sit down like this and chat, it beats teleconferencing, video conferencing, faces on a multiple screen in front of you and PowerPoint at a distance, it just blows them out of the water.

EA: Absolutely. And even here we could make it better, because you just said that to me, but you’re staring past me; so if you were looking at me when you were doing that, or we can start to get eye contact and facial expression and body language; those things will come with time. But even now, with us sitting here a bit rigid, not looking at each other completely, and not being able to see our body language and facial expression, it’s still better than all those other things.

And that’s why I mentioned earlier … it’s great to hear groups like yourselves and like TechSoup that I spoke to a few weeks ago, where they’ve had meetings successfully with a large group of people for seven years running; and they couldn’t have imagined any other platform that would have enabled that. Just not enough people realise it, or are willing to take the time to enable that … so we need to lower the threshold it takes for people to have the pleasure of getting this type of experience.

1:24:39 SW: I think it’s looking really exciting as well. What I would love to see is people getting together and using Second Life 2.0, Second Life The Next Generation as easily and as smoothly as they use Skype. If you want to talk to your family, my step-daughter and her daughter live in Switzerland, so we talk to them once a week over Skype, and we have craning to try to look into laptop windows. I think it would be much cooler if we could meet virtually, and we’re used to each other’s virtual presence. I think that would be very cool.

EA: Yeah … I’m convinced, obviously, that it’s going to happen, and we’re in a great position of having enabled it for a lot of people who have had that experience and realised that this is a better experience. We just need to figure out how to continually lower the barriers of entry for people to be able to have this experience. And we can make it richer, too. So the future’s going to be very interesting and exciting.

1:26:06 SW: Finally, a chance to turn the tables. We’re on our 250th episode and we’ve both been involved with other Second Life and virtual worlds media, Elrik with Radio RIEL, me with Prim Perfect, Happy Hunting, The PrimGraph, The Blackened Mirror, and all those sort of things. Is there anything you’d like to ask us?

EA: Well, of course! (laughs). Tell me all your wishes and needs and hopes!

EM: We’ll be in contact with a list!

EA: Please, please send us lists! Please send us your wishes and hopes. We look at it all; I read the forums, I go in every two weeks and spend hours and hours reading the threads on the forums to just get a sense of people’s sentiments and wishes and needs and things that they wish they could do but couldn’t, or things that they can do that they want to make absolutely sure that they will continue to be able to do …. So please, if there’s anything …

1:27:39 SW: Well, right now I’m going to put in being able to raise one eyebrow!

EA: That’s the beginning, huh? Just one eyebrow!

SW: Just so when we’re making The Blackened Mirror, our cynical detective, our kind-of Humphrey Bogart character, when the broad is trying to get one over on him, he can just lift a cynical eyebrow! (laughs).

EA: I’m looking forward to facial expression, and we discussed this earlier, but the hardware’s coming; there’s already companies out there that are solving pieces of this puzzle of doing a great job of providing all of the data about how the face is moving. And then it’s up for us to be able to grab that data and be able to animate the avatar in real-time, with your expression.

… And you can see that this is something that Philip is playing with in High Fidelity, and we’re looking at what they’re doing. And I think over time, this is going to be stable, that you do this. That the technology already exists, and it probably will take a little while to tune it and tweak it and dial it in to be just so.

And you will have some funny moments, because people don’t make sure they’re the perfect distance and facing the camera at all times. They move about and do stuff where you’ll have some glitches and whatnot. And then all the complexity of what if you want to do all that with the Oculus, now what? Covering your face and at the same time record your face; so lots of problems to solve. But I feel that one is solvable; it’s probably a bit harder for the rest of your body. It would be great to be able to do your arms and hands as well … I mean how Italians possibly use this platform! (laughter).

So I’m looking forward to getting to that point. Like I said earlier, we’re trying to do just the basics right now, improving our mesh avatars in Second Life so that they do at least move their mouths and blink their eyes and not look so stone faced, which is just catching-up with the past. And then for the next generation, we’re still in the early phases of even just defining male and female avatar skeletons, structures, and the overall system for making sure we have lots of flexibility with regards to skeletons and what people can do with it. And then just starting to put all the things on top of that, and then on top of that, we now have something to start working on the next level of detail; of facial expressions and other devices to manoeuvre and control your avatars. So step-by-step; but I’m very much looking forward to getting to that point.

1:30:59 EM: I’ve got the Asus Xtion here, and the training system does a really good job, even if you’re wearing a headset, which is rather neat. And then there’s a Leap Motion sitting in a box over here as well, ready for (laughs) whatever comes next!

EA: Leap Motion; we speak with them every now and then. I met again with some of them at the Oculus conference just this last weekend. Unfortunately, 1.0 of Leap that we tried to do some work with … there wasn’t enough there to make it useful for people. 2.0 gets a lot closer; and looking forward to adding support for those types of things as well.

Hopefully someone does a really great job of some middleware, so that all kinds of input devices can be added on [rather than] us having to constantly be doing the special sauce for every one of those devices that come out. Because you’ll find, probably, a lot of different people preferring lots of different types of devices over time. The Holy Grail is one device that rules them all; or it might not be a device, but some input method. And it might not be that the one that really suits creators is the same that really suits the gamers that’s really the one that suits, call it the navigators and communicators – so it’ll be interesting to see; but ideally we have enough flexibility to allow people to use the input device of choice and interact as much as that input device allows in our products.

1:32:42 SW: I think it’s coming. Recently we did our first interview with someone who was using SL Go to communicate with us, and that was really interesting. She was in a hotel room and she wasn’t able to use a computer, she didn’t have a computer with her, she just had her tablet … so she used her tablet and SL Go. And I think if she hadn’t told us, we wouldn’t have realised, would we?

EM: Absolutely, you’re dead right.

EA: Yeah. The solution is quite good. People had grumbled about the pricing early on, but that’s not something that I completely control. That company, they need to make their business work. But yeah, I’ve played with it, it’s a good experience, it’s a very performant experience, because they do a lot of the computing in the cloud and just stream things up and down and don’t depend on a lot of horsepower in the tablet itself … How did she find the experience? Did it work well for her?

SW: It did. I think she was delighted that she was able to take part. It was after Relay for Life; we always do a show immediately after Relay for Life looking at some of the builds before they take everything down. And she thought she wouldn’t be able to come, because she was away and she was in a hotel room. But she tried SL Go and she found it worked perfectly.

EM: We also had very good results when we actually tried it out ourselves. We did a programme on it, and we had pre-release stuff and tried it on a number of different platforms, and we were very impressed.

EA: It’s a great solution.

1:35:00 SW: Well, Ebbe, thank you so much for coming and joining us on our 250th show. It’s been great to have you here, and lovely to talk to you.

EA: It’s been my pleasure, I enjoyed it. And thanks for all the work you’re doing to spread the word about all the things going on in Second Life and to talk about issues and create awareness and understanding. So I really appreciate that.

[Episode wind-down.]

With thanks to Saffia and Elrik at Designing Worlds for the advance look at the episode to produce this transcript, and congratulations to them and the Designing Worlds production team on reaching their 250th episode.

Interesting to note that Ebbe has no clue why the mesh starter avatars cause problems.

They cannot share clothes with the rest of SL, or even each other, and the fact that this is not considered an obvious root cause is… very interesting, and somewhat worrying for any design work for a future SL2.


4 months ago when CEO Altberg did his big bluff in the media they did have nothing. After his big bluff and the impact this had they started to code this new platform they bluffed about. OMG 1

Now they hope in 3 weeks they will be able to enter a blank world with a basic mesh uploader. That is all they have or will have in 3 weeks. High Fidelity has more than that already.

They hope to release an alpha for the selection of “friends” and “elite creators” and their “special friends again” by the summer of 2015 in 8 months.

New platform same “shady company with their shady business tactics” OMG 2

Then they do not really care where people go, either they go paying in SL2 or they keep paying tier in SL1, and if SL2 does not perform as expected they will evaluate their experiment. And if people leave SL1 in droves to SL2 and it all caves in so be it. But they understand a lot of people their livelihood depends on SL1 and they “care”. OMG 3

Interesting is that they can code up an entire new world from scratch in 4 months with a rather small team. They did not use one of the top game engines they coded it all from the ground up.

Think what they could do in two years time, for example coding everything back from the ground up for Second Life and not bothering their customer base like they do now. Just imagine if that Rod Humble had done his job instead of coding his little game projects for years.

The constant emphasis on how Second Life will be here for many many many many many years did humor me. Somebody tried to make up for his catastrophic blunder it seems.

Like Tali Rosca writes the most striking is that they do not really have a clue what residents do or why they use their product. Also interesting how “the son of the boss” gets to do paid contracting for Linden Lab. The son of the boss is just a kid.

I have strong doubts, I’m sure I am not the only one.


There are question marks hanging over the new platform and its potential for success, to be sure. I have my own doubts that it can reach the kind of active user numbers that have been mentioned (tens to hundreds of millions).

However, I would say that comparing the new venture directly with High Fidelity is a little unfair. High Fidelity have been actively building their platform and presence therein over the course of the last 18 months. The Lab appear to have only been building theirs in earnest for the last four (although its roots in terms of an idea appear to stretch back some two years).

“Think what they could do in two years time, for example coding everything back from the ground up for Second Life”

In some respects, that’s what they are doing. Any attempt to “code everything back from the ground up for Second Life” would likely result in a Second Life very different from the one we have now, with all the advantages of more modern capabilities and able to overcome all the various disadvantages of the current incarnation of SL. It would likely have elements of incompatibility large and small, just as the new platform will have incompatibilities large and small. It would likely have overlaps with the current SL, just like the new platform will have overlaps. But the result would likely be the same: a separate platform with its own distinct path to follow.

Will SL be around for years and years? Why shouldn’t it be? Let’s face it, the future of SL lies as much in our hands as it does in anyone else’s.

If the majority of people in Second Life reject the new platform, preferring to stay where they are and continue to enable it to generate revenues sufficient enough to offer the Lab a profit after operating costs, etc., then they have no reason to shut this world down, whether or not the new platform manages to find its own audience and user base.

“The son of the boss is just a kid.”

Aleks Altberg is approximately 24 years old, a successful professional rally car driver (a winning driver with APR Motorsport) and rally training instructor, and is / was (not sure of his current status) an instructor at the Lamborghini Driving Academy. None of which, I would respectfully suggest, is deserving of the pejorative use of the word “kid” to describe him.

Was there some nepotism involved in him working with the Lab’s product team? Perhaps. But equally, he’s also spent a fair amount of time in Second Life as a user, in both the Teen Grid and the main grid. As such, who is to say he doesn’t have as much awareness of the platform as you or I, and isn’t as suited to forming valid opinions and feedback of the kind the product team were seeking?


High Fidelity does have a lot less funding and a much smaller team to code.

10 programmers at 18 months = 40 programmers at 4.5 months

Inara, you are aware of the huge amounts of time that went into the viewer alone; Linden is going to code a better one from scratch?

To give you an idea, gamers are complaining these days about how much they crash and get disconnected in Destiny. Destiny the result of years of coding and 500 million $. Gamers are not even hugely impressed by a 500 million $ project with ultra smooth physics, professional content and great looking shaders and lights.

I find it disturbing Linden Lab is doing its market research based on the findings of a 24 year old rally car driver, at least he is old enough to drink alcohol. There is a lot of promise there. I am sure the head of strategic business planning at Linden Lab is an award winning bikini model that got hired for the “assets” she brings to the company.

One would expect a company with 200 people in staff would bother to hire a market research firm to do a deep investigation and come up with a big fat report about exactly what and why and by who. The “kid” went to talk with the biggest fan girl Yo Yardley and got paid for it because he is the son of the boss. To me that is wrong, very wrong.

Comparing with High Fidelity and the usual friend politics and corruption by Linden is not my largest concern.

From what I hear Linden Lab is building a new software platform for which there is no actual need and demand. The more I learn about this and the more information I hear I wonder why?

People can use their tablet and it works rather well, almost nobody does.

Some people use their mobile phone to log in quickly and chat, only a small amount is doing that, it is there and it works.

People use the Oculus Rift and say it is neat, it is there and it works.

Content is there, there is talent and the quality of content keeps improving.

Second Life only needs polish, work that should have been done in the previous 5 years. Lots and lots of polish. Besides mesh there have been no noteworthy updates in almost half a decade. Everything else was fail. I think Linden Lab will end up with the same userbase but spread over two worlds which will give them more costs to maintain.

The userbase should not even hear about this polish. People in world should not know about the technical updates a software company is performing.

Four months ago Altberg the bluffer: “We at Linden Lab are the leading virtual world makers and we are working on the next generation platform that will be used by the millions”

and the truth was they did not have a thing then.

The credibility of a company or organization is what makes it stand. Bluffer Altberg is not bringing either and you know that as well.

Linden Lab had a bluffer before by the name of T Linden aka Tom Hale. I remember him shouting how Viewer 2 was going to be the greatest thing on earth. Ow look thanks to the internet you do not need to take my word for granted. Here we have him in action

http://business.treet.tv/shows/metanomics/episodes/tom-hale

Watch how he uses the same big words “The Second Life Experience” or “The evolution of the platform” or even better “Second Life is an eco system for consumer and enterprise usage”. Today the exact same thing happens again: “The next generation product” and “The platform for experiences”.

I find it a shame the residents need to put up with all this.


High Fidelity have strong funding (primarily through True Ventures and Google Ventures) and the luxury of focusing on a single product without the need to maintain a current platform or user community. Their investors are happy (I assume) with whatever roadmap and time frames have been defined to them such that the company doesn’t appear to be encumbered by the need to bring their product to market.

The Lab certainly doesn’t have those first luxuries – they have a product they need to maintain and a user community they need to support. And while they also may not be under any pressure to bring their new product to market ASAP, they nevertheless have to continue to make a profit in order to remain in business. That’s inevitably going to impact where skills and resources are directed, at least until they get to the position of having the kind of staffing levels they’re aiming for with which to build the new platform.

“I find it disturbing Linden Lab is doing its market research based on the findings of a 24 year old rally car driver, at least he is old enough to drink alcohol. There is a lot of promise there. I am sure the head of strategic business planning at Linden Lab is an award winning bikini model that got hired for the “assets” she brings to the company.”

Again, it’s a shame you have to litter your comments with pejoratives, as doing so does much to undermine your argument. However, we have no idea precisely what Aleks Altberg was asked to do, how long the work lasted, how involved it might have been, whether it warranted the involvement of a high-priced market research consultancy, or indeed, whether it was even related to marketing (Aleks worked with the product team, which doesn’t automatically equate to marketing).

“From what I hear Linden Lab is building a new software platform for which there is no actual need and demand. The more I learn about this and the more information I hear I wonder why?”